This post is going to attempt to demystify the several layers of networking operating in a Kubernetes cluster. Kubernetes is a powerful platform embodying many intelligent design choices, but discussing the way things interact can get confusing: pod networks, service networks, cluster IPs, container ports, host ports, node ports… I’ve seen a few eyes glaze over. We mostly talk about these things at work, cutting across all layers at once because something is broken and someone wants it fixed. If you take it a piece at a time and get clear on how each layer works, it all makes sense in a rather elegant way.

To keep things focused, I’m going to split the post into three parts. This first part will look at containers and pods. The second will examine services, which are the abstraction layer that allows pods to be ephemeral. The last post will look at ingress and getting traffic to your pods from outside the cluster. A few disclaimers first. This post isn’t intended to be a basic intro to containers, Kubernetes, or pods. To learn more about how containers work, see this overview from Docker. A high-level overview of Kubernetes can be found here, and an overview of pods specifically is here. Lastly, a basic familiarity with networking and IP address spaces will be helpful.

Pods

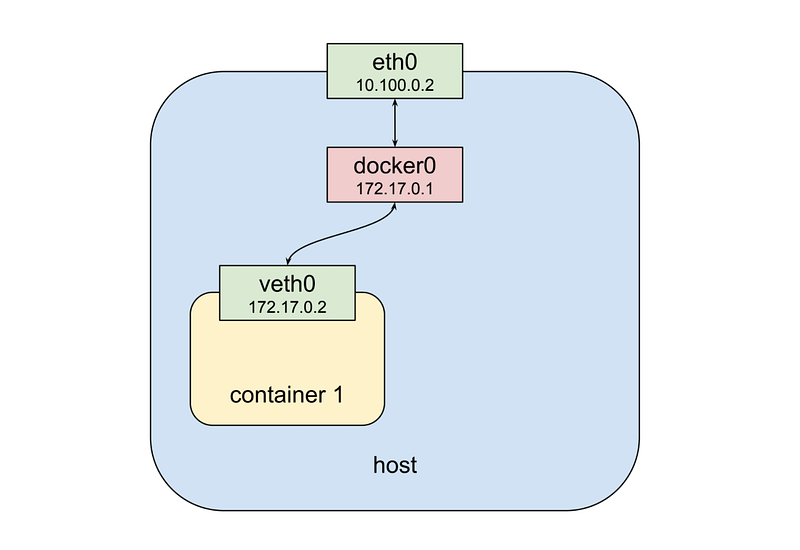

So what is a pod? A pod consists of one or more containers that are collocated on the same host and configured to share a network stack and other resources such as volumes. Pods are the basic unit Kubernetes applications are built from. What does “share a network stack” actually mean? In practical terms, it means that all the containers in a pod can reach each other on localhost. If I have a container running nginx and listening on port 80 and another container running scrapyd, the second container can connect to the first as http://localhost:80. But how does that really work? Let's look at the typical situation when we start a docker container on our local machine:
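To make the localhost claim concrete, here is a minimal sketch. It does not start real containers: two threads in one process stand in for two containers in the same pod, which works as an analogy because they share one network stack. The function names are hypothetical, and the listener uses an OS-assigned port instead of port 80 so it runs without root.

```python
import socket
import threading


def serve_once(listener: socket.socket, reply: bytes) -> None:
    """Accept a single connection, send a fixed reply, and close it."""
    conn, _ = listener.accept()
    with conn:
        conn.sendall(reply)


def talk_over_localhost(reply: bytes = b"hello from the web container") -> bytes:
    # "Container A": listen on the loopback interface.
    # Port 0 asks the OS for any free port (the post's example uses 80).
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))
    listener.listen(1)
    port = listener.getsockname()[1]

    server = threading.Thread(target=serve_once, args=(listener, reply))
    server.start()

    # "Container B": connect over the same loopback interface and read
    # until the server closes the connection.
    chunks = []
    with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
        while True:
            data = client.recv(1024)
            if not data:
                break
            chunks.append(data)

    server.join()
    listener.close()
    return b"".join(chunks)


if __name__ == "__main__":
    print(talk_over_localhost())
```

The only address either side needs is 127.0.0.1 plus a port number, which is exactly the situation two containers in one pod find themselves in.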

From the top down we have a physical network interface eth0. Attached to that is a bridge docker0, and attached to that is a virtual network interface veth0. Note that docker0 and veth0 are both on the same network, 172.17.0.0/24 in this example. On this network, docker0 is assigned 172.17.0.1 and is the default gateway for veth0, which is assigned 172.17.0.2. Due to how network namespaces are configured when the container is launched, processes inside it see only veth0 and communicate with the outside world through docker0 and eth0. Now let’s launch a second container:

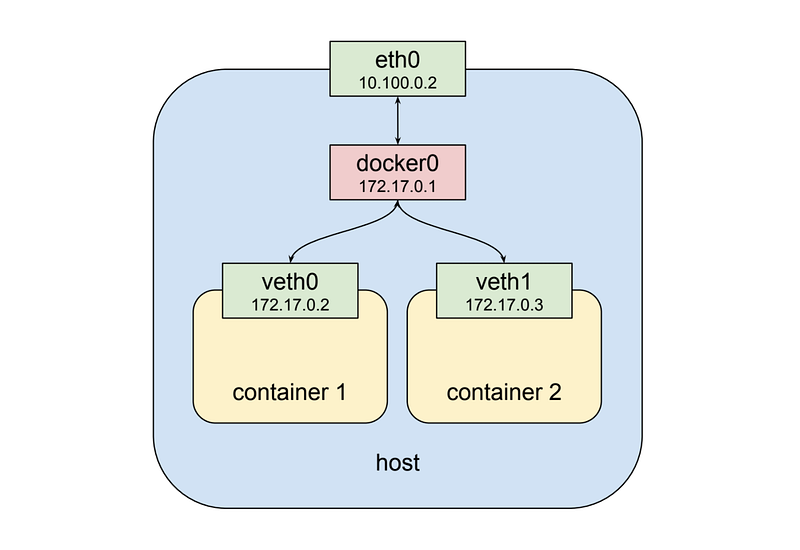

As shown above, the second container gets a new virtual network interface veth1, connected to the same docker0 bridge.* This interface is assigned 172.17.0.3, so it is on the same logical network as the bridge and the first container, and both containers can communicate through the bridge as long as they can discover the other container’s IP address somehow.
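The "same logical network" point can be checked with a little address arithmetic. The sketch below uses Python's standard `ipaddress` module and the example addresses from this post; the function name `delivery` and its return strings are made up for illustration.

```python
import ipaddress

# Example values from this post: the docker0 bridge network and its address.
BRIDGE_NETWORK = ipaddress.ip_network("172.17.0.0/24")
GATEWAY = ipaddress.ip_address("172.17.0.1")  # docker0


def delivery(dst: str) -> str:
    """Say how a container on the bridge network would reach dst."""
    if ipaddress.ip_address(dst) in BRIDGE_NETWORK:
        # Same network: the bridge can hand the packet straight over.
        return "direct via bridge"
    # Different network: the packet goes to the default gateway first.
    return f"via gateway {GATEWAY}"


if __name__ == "__main__":
    print(delivery("172.17.0.3"))  # the second container
    print(delivery("10.100.0.3"))  # an address outside the bridge network
```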

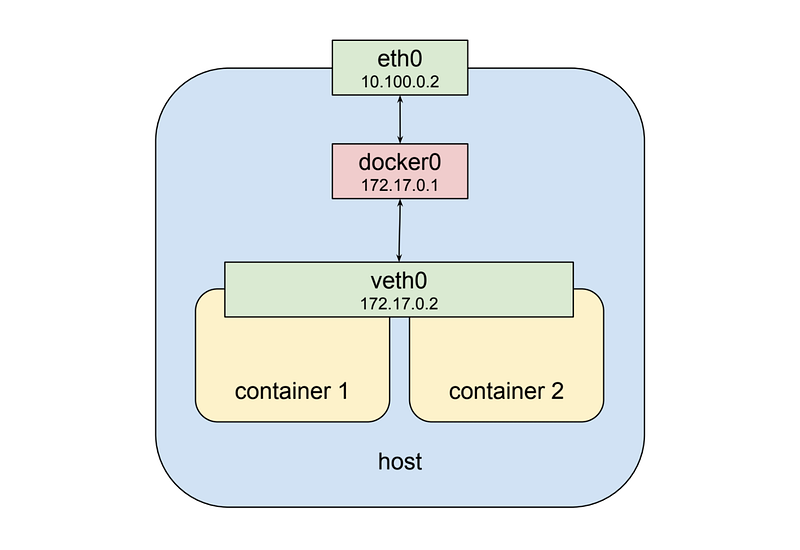

That’s fine and all, but it doesn’t get us to the “shared network stack” of a Kubernetes pod. Fortunately, namespaces are very flexible. Docker can start a container and, rather than creating a new virtual network interface for it, specify that it shares an existing interface. In this case, the drawing above looks a little different:

Now the second container sees veth0 rather than getting its own veth1 as in the previous example. This has a few implications: first, both containers are addressable from the outside on 172.17.0.2, and on the inside, each can hit ports opened by the other on localhost. This also means that the two containers cannot open the same port, which is a restriction but no different than the situation when running multiple processes on a single host. In this way, a set of processes can take full advantage of the decoupling and isolation of containers, while at the same time collaborating in the simplest possible networking environment.
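The port restriction is easy to reproduce on any single host, which is the comparison made above. This sketch, with a hypothetical function name, binds two sockets to the same loopback address and port and reports whether the second attempt is refused as "address in use". Two containers sharing one network stack would hit the same error.

```python
import errno
import socket


def second_bind_is_refused() -> bool:
    """Bind two sockets to one address and port; True if the second fails."""
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        # The first "container" claims a free port and starts listening.
        first.bind(("127.0.0.1", 0))
        first.listen(1)
        port = first.getsockname()[1]
        try:
            # The second "container" tries to claim the very same port.
            second.bind(("127.0.0.1", port))
        except OSError as exc:
            return exc.errno == errno.EADDRINUSE
        return False
    finally:
        first.close()
        second.close()


if __name__ == "__main__":
    print(second_bind_is_refused())
```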

The Pod Network

That’s all pretty cool, but one pod full of containers that can talk to each other does not get us a system. For reasons that will become even clearer in the next post where I discuss services, the very heart of Kubernetes’ design requires that pods be able to communicate with other pods whether they are running on the same host or separate hosts. To look at how that happens we need to step up a level and look at nodes in a cluster. This section will contain some unfortunate references to network routing and routes, a subject I realize all of humanity would prefer to avoid. Finding a clear, brief tutorial on IP routing is difficult, but if you want a decent review, Wikipedia's article on the topic isn’t horrible.

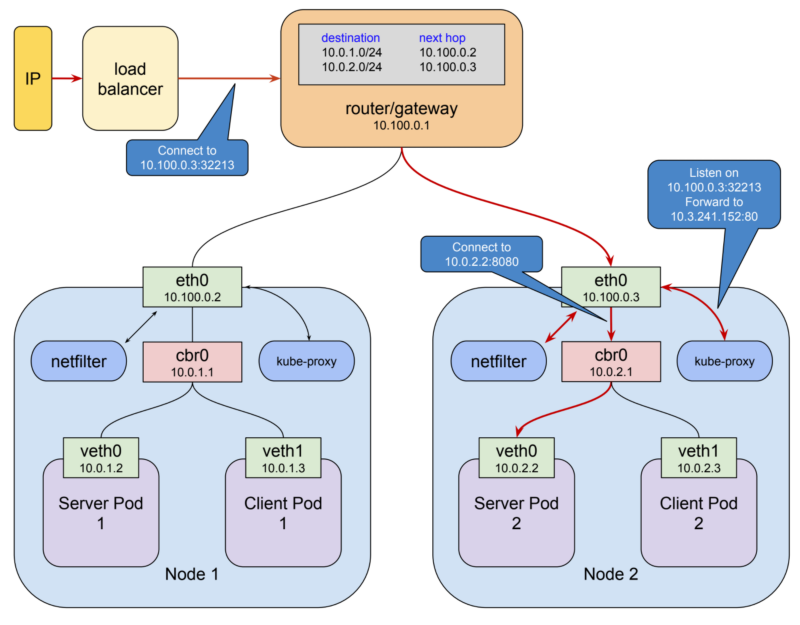

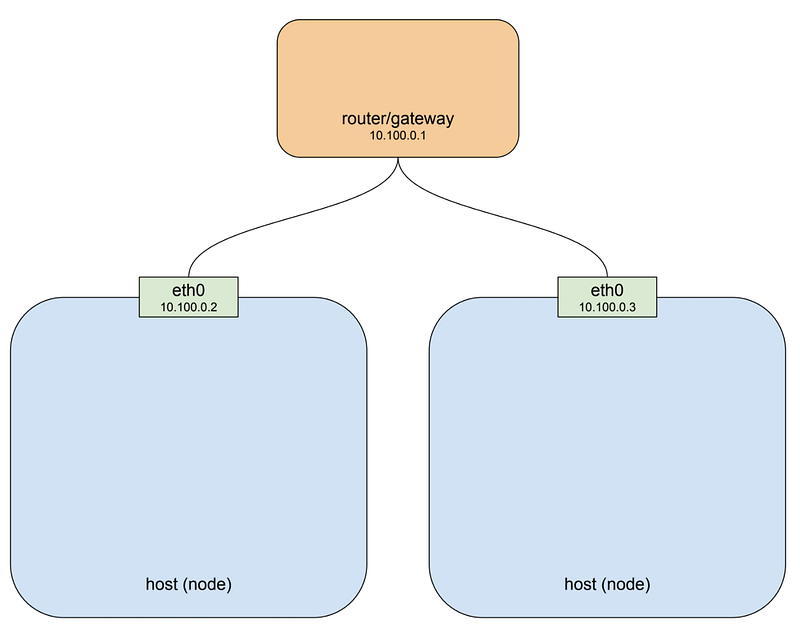

A Kubernetes cluster consists of one or more nodes. A node is a host system, whether physical or virtual, with a container runtime and its dependencies (mostly Docker) and several Kubernetes system components, that is connected to a network that allows it to reach other nodes in the cluster. A simple cluster of two nodes might look like this:

If you’re running your cluster on a cloud platform like GCP or AWS, that drawing pretty well approximates the default networking architecture for a single project environment. For the purposes of illustration, I’ve used the private network 10.100.0.0/24 for this example, so the router is 10.100.0.1 and the two instances are 10.100.0.2 and 10.100.0.3 respectively. Given this setup, each instance can communicate with the other on eth0. That’s great, but recall that the pod we looked at above is not on this private network: it’s hanging off a bridge on a different network entirely, one that is virtual and exists only on a specific node.

The host on the left has interface eth0 with an address of 10.100.0.2, whose default gateway is the router at 10.100.0.1. Connected to that interface is bridge docker0 with an address of 172.17.0.1, and connected to that is interface veth0 with address 172.17.0.2. The veth0 interface was created with the pause container and is visible inside all three containers by virtue of the shared network stack. Because of local routing rules set up when the bridge was created, any packet arriving at eth0 with a destination address of 172.17.0.2 will be forwarded to the bridge, which will then send it on to veth0. Sounds ok so far. If we know we have a pod at 172.17.0.2 on this host, we can add a rule to our router setting the next hop for that address to 10.100.0.2, and packets for the pod will get forwarded from there to veth0.
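The router rule described above is a next-hop lookup. As a rough sketch (the table layout and the `lookup` function are illustrative, not how any particular router stores its routes), the router picks the most specific entry that matches the destination, a rule usually called longest-prefix match:

```python
import ipaddress

# Illustrative routing table for the router at 10.100.0.1.
# Each entry is (destination network, next hop); a next hop of None
# means the destination is on a directly attached network.
ROUTES = [
    (ipaddress.ip_network("10.100.0.0/24"), None),
    # The rule from the text: reach the pod at 172.17.0.2 via the left host.
    (ipaddress.ip_network("172.17.0.2/32"), "10.100.0.2"),
]


def lookup(dst: str) -> str:
    """Return the next hop for dst, "direct", or "no route"."""
    addr = ipaddress.ip_address(dst)
    matches = [(net, hop) for net, hop in ROUTES if addr in net]
    if not matches:
        return "no route"
    # Longest-prefix match: the most specific network wins.
    _, hop = max(matches, key=lambda entry: entry[0].prefixlen)
    return "direct" if hop is None else hop


if __name__ == "__main__":
    for dst in ("172.17.0.2", "10.100.0.3", "172.17.0.3"):
        print(dst, "->", lookup(dst))
```

Note that a per-pod rule like this only covers the one address it names, so 172.17.0.3 has no route until someone adds one.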

The host on the right also has eth0, with an address of 10.100.0.3, using the same default gateway at 10.100.0.1, and again connected to it is the docker0 bridge with an address of 172.17.0.1. Hmm. That’s going to be an issue. Now, this address might not actually be the same as the other bridge on host 1. I’ve made it the same here because that’s a pathological worst case, and it might very well work out that way if you just installed docker and let it do its thing. But even if the chosen network is different, this highlights the more fundamental problem, which is that one node typically has no idea what private address space was assigned to a bridge on another node, and we need to know that if we’re going to send packets to it and have them arrive at the right place. Clearly some structure is required.
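The worst case described here, two nodes whose bridges claim the same address range, can be detected mechanically. The following sketch assumes we somehow know each node's bridge network, which is precisely the knowledge the nodes lack by default; the function name and the input format (node IP mapped to bridge CIDR) are made up for illustration.

```python
import ipaddress
from itertools import combinations
from typing import Dict, List, Tuple


def conflicting_bridges(bridges: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return pairs of nodes whose bridge networks overlap.

    bridges maps a node's eth0 address to its bridge network in CIDR form.
    """
    conflicts = []
    for (node_a, cidr_a), (node_b, cidr_b) in combinations(bridges.items(), 2):
        net_a = ipaddress.ip_network(cidr_a)
        net_b = ipaddress.ip_network(cidr_b)
        if net_a.overlaps(net_b):
            # A packet for an address in the shared range is ambiguous:
            # the router cannot tell which node it belongs to.
            conflicts.append((node_a, node_b))
    return conflicts


if __name__ == "__main__":
    print(conflicting_bridges({
        "10.100.0.2": "172.17.0.0/24",
        "10.100.0.3": "172.17.0.0/24",
    }))
```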

Kubernetes provides that structure in two ways. First, it assigns an overall address space for the bridges on each node, and then assigns the bridges addresses within that space, based on the node the bridge is built on. Secondly, it adds routing rules to the gateway at 10.100.0.1 telling it how packets destined for each bridge should be routed, i.e. which node’s eth0 the bridge can be reached through. Such a combination of virtual network interfaces, bridges, and routing rules is usually called an overlay network. When talking about Kubernetes I usually call this network the “pod network”.
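Here is a small sketch of that two-step idea: carve one overall address space into a distinct range per node, then derive the gateway's routing rules from the result. The overall range 10.0.0.0/14, the /24 per node, and the function name are assumptions chosen for illustration; this is not Kubernetes' actual allocation code.

```python
import ipaddress
from typing import Dict, List


def plan_pod_network(cluster_cidr: str, node_ips: List[str],
                     node_prefix: int = 24) -> Dict[str, Dict[str, str]]:
    """Give each node its own bridge subnet inside cluster_cidr."""
    cluster = ipaddress.ip_network(cluster_cidr)
    available = 2 ** (node_prefix - cluster.prefixlen)
    if len(node_ips) > available:
        raise ValueError("cluster range is too small for this many nodes")

    plan = {}
    subnets = cluster.subnets(new_prefix=node_prefix)
    for node_ip, subnet in zip(node_ips, subnets):
        plan[node_ip] = {
            # Address range handed to this node's bridge.
            "bridge_network": str(subnet),
            # First usable address, used here as the bridge's own address.
            "bridge_address": str(next(subnet.hosts())),
            # Rule for the gateway: reach that range through this node.
            "gateway_route": f"{subnet} via {node_ip}",
        }
    return plan


if __name__ == "__main__":
    plan = plan_pod_network("10.0.0.0/14", ["10.100.0.2", "10.100.0.3"])
    for node, entry in plan.items():
        print(node, entry)
```

Because every bridge range comes out of the same plan, no two nodes can end up with the same bridge network, and the gateway always knows which node's eth0 leads to which range.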
