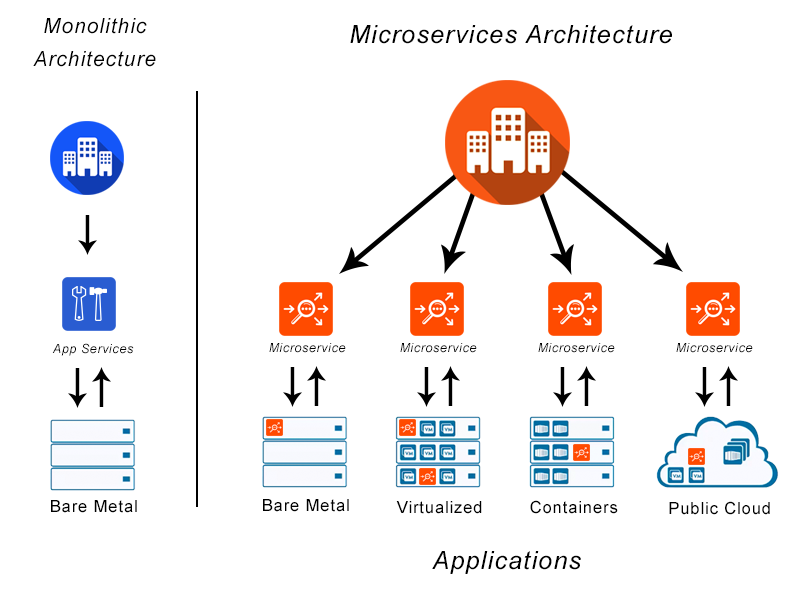

The name Kubernetes comes from the Greek word for helmsman or ship's pilot, and Kubernetes steers a ship of containers for our applications. Kubernetes grew out of Borg, an internal Google project. Borg eventually evolved into Kubernetes, built by many of the people who had worked on Borg. In 2015 Google donated the project to the Cloud Native Computing Foundation (CNCF). Kubernetes addresses the problem of the application monolith, defined here as a software product running on dedicated hardware. Monoliths are expensive, and their hardware quickly becomes legacy. Upgrading them to virtual environments and clouds is expensive and complicated. The advent of microservices, APIs such as REST services, Service-Oriented Architecture (SOA), and the cloud has made the monolith largely obsolete.

The services Kubernetes provides include:

- Deployment - Application Distribution

- Sensitive Information - Certificates, Passwords

- Registration and scaling

- Load Balancing - Managing requests, data load, traffic, and computing processing power for running applications.

- Job scheduling and Management.

- DevOps

- Continuity and Fault Tolerance

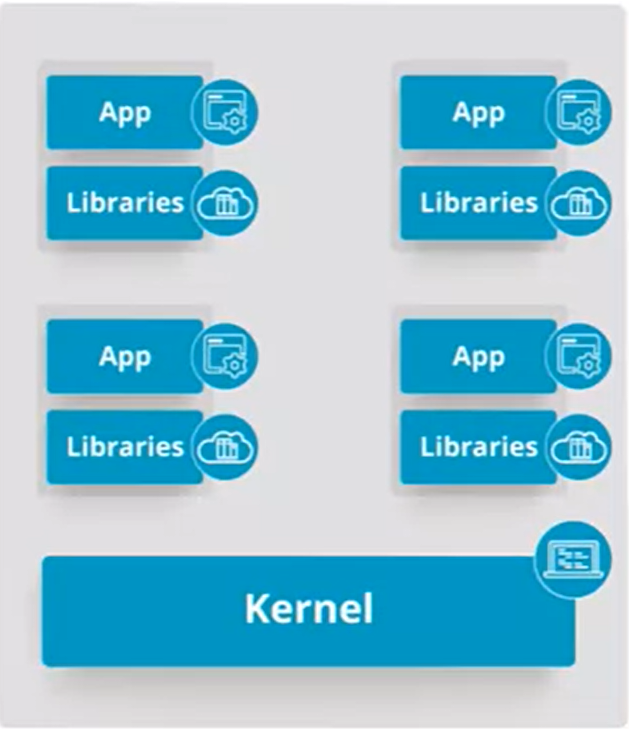

Before we go further, it is important to discuss container technologies. Kubernetes and similar products host applications running in containers. Containers are a solution for reliably deploying and running software in different computing environments. A container is a standardized package consisting of an application and everything needed to execute it, including runtimes, system tools, libraries, and settings.

Multiple containers can also run on the same operating system (OS) and share the OS kernel. Each application and its dependencies are packaged in their own container. The difference from a traditional deployment is that the supporting infrastructure is abstracted away: the underlying infrastructure only has to run the containers.

Container management

One of Kubernetes' key features is container management, or orchestration.

Containers:

- Are not unique to Kubernetes.

- Are application-centric, operating system (OS) level virtualized packages, often used in Platform-as-a-Service (PaaS) offerings, that include the application's services and other dependencies.

- Run in isolation from one another, and do not interact unless explicitly configured to

- Run with their own set of services

- Are not full virtual machines

All Containers connect to and run using the same OS kernel.

Container Orchestration

Container orchestration is the automatic process of managing and scheduling the work of individual containers for microservice-based applications across multiple clusters. Container orchestration supports the following:

- Fault-Tolerance: Business continuity

- Optimization

- Real-time scalability

- Automatic discovery of other Containers

- Online updates, recovery, and rollbacks without downtime
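
To make the last item concrete, here is a minimal sketch of an update without downtime. It is a toy model, not how any particular orchestrator implements it: pods are represented as plain version strings, and the function name `rolling_update` and the `min_available` parameter are assumptions made for illustration.

```python
def rolling_update(pods, new_version, min_available):
    """Replace pods one at a time so that enough pods always stay in service.

    `pods` is a list of version strings, one per running pod (toy model).
    Returns every intermediate state, ending with all pods on `new_version`.
    """
    # Replacing one pod at a time leaves len(pods) - 1 pods serving traffic.
    if min_available > len(pods) - 1:
        raise ValueError("replacing one pod at a time needs min_available < pod count")

    current = list(pods)
    states = []
    for i, version in enumerate(current):
        if version == new_version:
            continue  # already up to date
        current[i] = new_version  # swap a single old pod for a new one
        states.append(list(current))
    return states


history = rolling_update(["v1", "v1", "v1"], "v2", min_available=2)
for state in history:
    print(state)
```

In this model a rollback is the same procedure run in the other direction, for example `rolling_update(history[-1], "v1", min_available=2)`.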

Kubernetes is an open-source container orchestration tool. However, Amazon Web Services, Azure, Docker, and other vendors also offer container orchestration solutions.

Container orchestrators can be deployed and installed on many types of infrastructure. Organizations and users can almost always select the environment with which they are most familiar: “bare metal”, virtual machines, or private or public clouds. A very popular model is installing Kubernetes on an infrastructure as a service (IaaS) solution such as Amazon Web Services or Google Cloud. Kubernetes can be installed quite readily on these IaaS solutions with only a few commands.

Kubernetes features

Some Kubernetes features for supporting Container Orchestration:

- Fault tolerance / Self-healing - As a multi-node cluster solution, Kubernetes can automatically replace and reschedule containers from failed nodes.

- Automatic Bin Packing - To maximize resource utilization.

- Horizontal Scaling - Kubernetes can dynamically add containers as needed based on processing utilization rules and criteria

- In Kubernetes, Clusters run Pods - Each Pod receives its own IP address. Containers within the same Pod communicate using localhost, and load balancing is supported across a set of Pods.

- Automatic Recovery and Rollbacks - Kubernetes saves versions of the application and environment configuration. When needed, Kubernetes can restore or roll back to a stable version of the app and/or the environment configuration.

- Secret and Configuration management - Kubernetes manages application secrets and configuration data separately to avoid extensive image rebuilds. Secrets consist of confidential information passed to the application without revealing the sensitive content to the stack configuration.

- Storage Orchestration - Kubernetes automatically mounts storage for containers from local appliance storage or virtual storage.

- Batch Execution - Kubernetes supports batch operations, long-running processes, and container failover.
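
As an illustration of the horizontal scaling item, the sketch below computes a replica count from a utilization rule. It assumes a ratio-based rule similar to the one documented for the Kubernetes Horizontal Pod Autoscaler; the function name, the parameter names, and the floor of one replica are choices made for this example.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization):
    """Scale the replica count in proportion to measured vs. target utilization.

    Assumed rule: ceil(current_replicas * current / target), never below 1.
    """
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    ratio = current_replicas * current_utilization / target_utilization
    return max(1, math.ceil(ratio))


# Four pods running at 90% CPU against a 60% target: scale out.
scale_out = desired_replicas(4, 90, 60)
# Four pods running at 30% CPU against a 60% target: scale in.
scale_in = desired_replicas(4, 30, 60)
print(scale_out, scale_in)
```

Rounding up means the rule errs on the side of slightly more capacity rather than slightly less.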

Kubernetes has a flexible, modular architecture that supports plugins and microservice APIs. Kubernetes is extensible and can be augmented with custom programs, API calls, plugins, and so on. Kubernetes is supported by a community of more than 2,000 contributors. The Kubernetes community also includes local groups such as meetups, as well as Special Interest Groups focused on scaling, networking, and other features.

For Kubernetes, the CNCF offerings include:

- Licensing and proper use.

- Scanning for vendor code

- Marketing and conferences

- Legal guidance

- Certification standards

- Other items

The Kubernetes host, the Cloud Native Computing Foundation (CNCF), is part of the Linux Foundation. The CNCF also hosts several generally available (GA) products and incubating projects. Besides Kubernetes, the CNCF’s GA products include Prometheus for monitoring, Fluentd for logging, and several others.

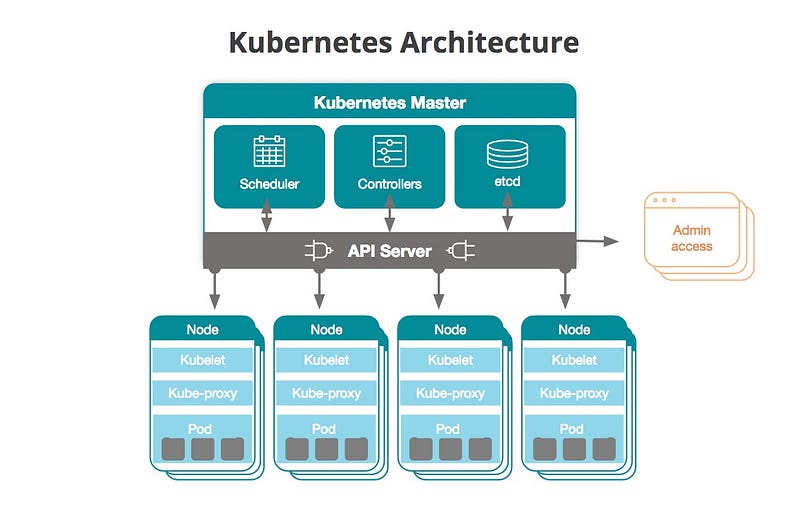

Kubernetes Architecture

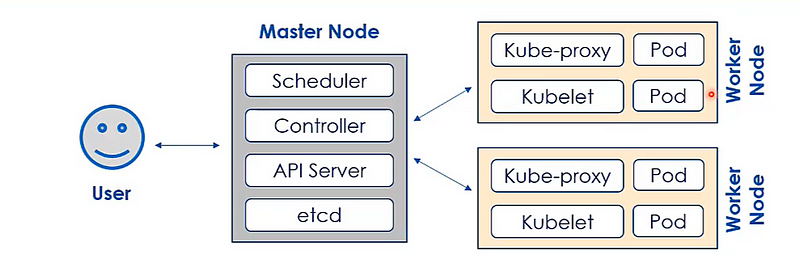

In general, Kubernetes architecture consists of four major parts:

- The User Interface

- The Master node

- The Worker node

- The etcd key-value store

Kubernetes has a node-based architecture with two types of nodes.

1. Master Node:

The master node provides connections to the user interface (UI) and runs the control plane services. The control plane services manage and operate the Kubernetes cluster, and each service has a distinct role in cluster operation. The UI includes a command-line interface (CLI), a web dashboard, and an API. Since the master node runs the cluster, downtime is not tolerated. For redundancy, a replication strategy is used: master node replicas are added to the cluster in High Availability (HA) mode. State data for the cluster is stored in etcd, the key-value store. HA mode keeps all master node replicas synchronized. If the currently active master node goes offline, a replica takes over and continues operating as the master node. The master node runs four components: the API server, the scheduler, the controller, and etcd, the key-value store.

The API server manages all administrative tasks in the master node. The API server receives users' REST calls and reads the state data from etcd. After any API processing completes, the new state of the cluster is written to the etcd key-value store. The API server can be configured and customized.
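
The request flow described above can be sketched as a toy model. This is not the Kubernetes API: `KeyValueStore`, `ApiServer`, the `handle` method, and the `/pods/web` path are all hypothetical names chosen for illustration, and the store is a plain in-memory dictionary standing in for etcd.

```python
class KeyValueStore:
    """Hypothetical stand-in for etcd: a dictionary with a revision counter."""

    def __init__(self):
        self._data = {}
        self.revision = 0

    def get(self, key):
        return self._data.get(key)

    def put(self, key, value):
        self._data[key] = value
        self.revision += 1  # every write produces a new cluster-state revision
        return self.revision


class ApiServer:
    """Toy API server: receives REST-style calls and persists state to the store."""

    def __init__(self, store):
        self._store = store

    def handle(self, method, path, body=None):
        if method == "GET":
            value = self._store.get(path)
            return (200, value) if value is not None else (404, None)
        if method == "PUT":
            self._store.put(path, body)  # write the new state after processing
            return (200, body)
        return (405, None)  # method not supported in this sketch


store = KeyValueStore()
api = ApiServer(store)
api.handle("PUT", "/pods/web", {"image": "nginx", "replicas": 2})
status, pod = api.handle("GET", "/pods/web")
print(status, pod, store.revision)
```

Keeping the store behind a single class mirrors the idea that other components reach cluster state through the API server rather than touching the store themselves.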

The Scheduler assigns objects, such as Pods, to nodes. Node assignment is largely based on the Kubernetes cluster's current state and the object's runtime requirements. The scheduler obtains the cluster state held in etcd through the API server, and the new object's requirements from the object's configuration files. The scheduler is also highly configurable and can run complex operations in multi-node clusters.
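
A minimal sketch of that decision, assuming a simple two-step approach: filter out nodes that cannot fit the pod, then prefer the node with the most free capacity. The dictionary fields (`free_cpu`, `free_mem`) and node names are hypothetical, and the real scheduler weighs many more criteria.

```python
def schedule(pod, nodes):
    """Pick a node for `pod` and reserve its resources; return None if nothing fits."""
    # Step 1: keep only nodes with enough free CPU and memory for the pod.
    feasible = [
        node for node in nodes
        if node["free_cpu"] >= pod["cpu"] and node["free_mem"] >= pod["mem"]
    ]
    if not feasible:
        return None

    # Step 2: among feasible nodes, prefer the one with the most free capacity.
    best = max(feasible, key=lambda node: (node["free_cpu"], node["free_mem"]))

    # Record the assignment so later decisions see the updated cluster state.
    best["free_cpu"] -= pod["cpu"]
    best["free_mem"] -= pod["mem"]
    return best["name"]


nodes = [
    {"name": "node-a", "free_cpu": 2.0, "free_mem": 4096},
    {"name": "node-b", "free_cpu": 4.0, "free_mem": 2048},
]
first = schedule({"cpu": 1.0, "mem": 1024}, nodes)   # most free CPU wins
second = schedule({"cpu": 1.0, "mem": 3072}, nodes)  # only one node has the memory
third = schedule({"cpu": 8.0, "mem": 512}, nodes)    # no node is large enough
print(first, second, third)
```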

The Controller component manages the state of the Kubernetes cluster and the currently running Pods. A Pod is a group of one or more containers. The controller manages a configuration called a Deployment. The desired cluster state, such as the required number of running Pods, is configured into the controller, which acts when Pods go offline.
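
The controller's behavior can be pictured as a reconciliation pass that compares the required number of pods with what is actually running. The sketch below is a simplified model under that assumption; the `reconcile` function and the `web-N` pod names are hypothetical.

```python
import itertools


def reconcile(desired_replicas, running_pods, name_source):
    """Return the pod list after one pass that restores the desired pod count."""
    pods = list(running_pods)
    # Too few pods (for example after a failure): start replacements.
    while len(pods) < desired_replicas:
        pods.append(f"web-{next(name_source)}")
    # Too many pods (for example after scaling down): remove the surplus.
    del pods[desired_replicas:]
    return pods


names = itertools.count(1)
pods = reconcile(3, [], names)      # initial rollout
print(pods)
pods.remove("web-2")                # simulate a pod going offline
pods = reconcile(3, pods, names)    # the controller replaces it
print(pods)
pods = reconcile(2, pods, names)    # desired state lowered to two pods
print(pods)
```

The function never asks why the counts differ; it only moves the actual state toward the configured one, which is what lets the same pass handle failures and scaling alike.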

etcd is the key-value repository where Kubernetes stores the cluster's state. As stated earlier, only the API server accesses the etcd key-value store directly. etcd can run in HA mode to support fault tolerance.

2. Worker Node:

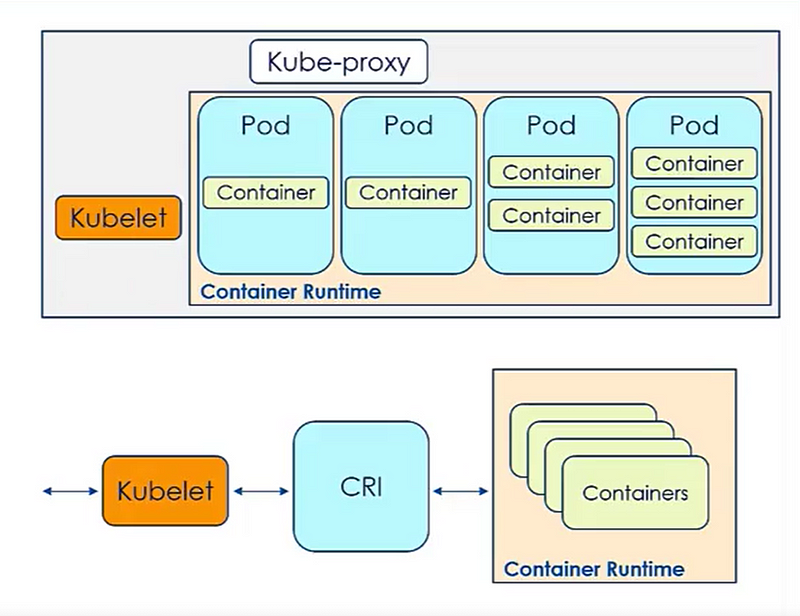

Like master nodes, worker nodes have four components:

- Container runtime

- Kubelet

- Kube-proxy

- Plugins for DNS, Dashboard, cluster monitoring, and cluster logging.

The worker node is the runtime environment for the application. Through containerization and microservices, applications run in Pods. Pods are managed on worker nodes, where they consume computing resources such as memory, storage, and networking.

The kubelet is a service that communicates with the master node and the containers. The kubelet connects to the containers through the container runtime, using the Container Runtime Interface (CRI).

Kubernetes is a container orchestration engine; interestingly, however, it does not directly execute containers. Kubernetes needs a container runtime on each node where Pods and their containers are to run. Kubernetes supports several container runtimes, including:

- Docker: a very popular runtime for Kubernetes

- CRI-O: Considered a lightweight container runtime

- containerd: A general-purpose container runtime built for many solutions

- rktlet: A Pod-native container runtime.
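
Because the kubelet talks to an interface rather than to one specific runtime, runtimes can be swapped without changing the kubelet. The sketch below illustrates that idea only: the real CRI is a gRPC API, and the `ContainerRuntime`, `FakeRuntime`, and `Kubelet` classes and their methods here are hypothetical simplifications.

```python
from abc import ABC, abstractmethod


class ContainerRuntime(ABC):
    """Hypothetical, heavily simplified runtime interface (not the real CRI)."""

    @abstractmethod
    def start(self, name, image):
        """Start a container called `name` from `image`."""

    @abstractmethod
    def stop(self, name):
        """Stop the container called `name`."""


class FakeRuntime(ContainerRuntime):
    """In-memory runtime used only to demonstrate the interface."""

    def __init__(self):
        self.running = {}

    def start(self, name, image):
        self.running[name] = image

    def stop(self, name):
        self.running.pop(name, None)


class Kubelet:
    """Toy kubelet: it only knows the interface, not the concrete runtime."""

    def __init__(self, runtime):
        self._runtime = runtime

    def sync_pod(self, containers):
        # Start every container of a pod, given a {name: image} mapping.
        for name, image in containers.items():
            self._runtime.start(name, image)


runtime = FakeRuntime()
Kubelet(runtime).sync_pod({"web": "nginx", "sidecar": "busybox"})
print(sorted(runtime.running))
```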

The kube-proxy is the networking agent for each node.

The plugins and add-ons include:

- DNS - Cluster DNS is a DNS server that assigns DNS records to Kubernetes resources.

- Dashboard - A general-purpose web-based user interface for cluster management

- Monitoring - Collects cluster-level container metrics

- Logging - Collects cluster-level container logs.
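
As an example of the DNS records mentioned in the first item, a Service is conventionally reachable under a name of the form `<service>.<namespace>.svc.<cluster-domain>`. The helper below builds such a name; the function itself is hypothetical, and `cluster.local` is assumed as the cluster domain because it is the usual default.

```python
def service_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Build the conventional in-cluster DNS name for a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


web_name = service_dns_name("web")
metrics_name = service_dns_name("prometheus", namespace="monitoring")
print(web_name)
print(metrics_name)
```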
